Despite using various measurement frameworks, many engineering leaders fall into the trap of measuring what's easy rather than what's meaningful.

Most measurement approaches treat engineering as a purely technical practice. They assume you can optimize it through technical metrics alone. But engineering organizations are sociotechnical systems. Human collaboration, communication patterns, and environmental factors matter just as much as code deployment statistics.

Engineering organizations already have plenty of data. What they lack is a clear framework to interpret that data and drive meaningful change.

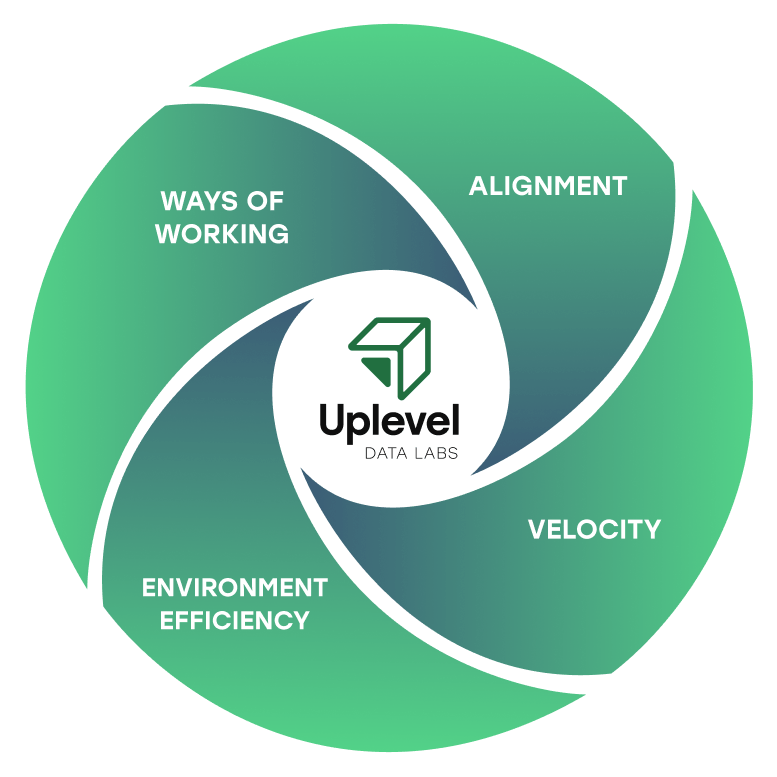

Uplevel's WAVE Framework can transform your engineering metrics from mere measurements into actionable insights that drive real improvement.

What’s the problem with traditional engineering KPIs?

Many organizations collect metrics without understanding what they're trying to achieve. Traditional KPIs often create an illusion of control. They fail engineering leaders in several ways:

- Too much focus on individual output: Engineering leaders often track metrics like PR counts, number of bugs squashed, or story points completed. But these metrics don't accurately reflect productivity. They can lead to harmful behaviors like artificially inflating PR counts or submitting unnecessary code changes.

- Overreliance on lagging metrics: Frameworks like DORA give you valuable insights, but these are backward-looking measurements. For engineering leaders under pressure to improve future performance, understanding that deployment frequency was low last quarter offers limited guidance on what to change now.

- Neglect of team dynamics: Research has shown that team collaboration patterns are often stronger predictors of success than individual technical skills. Yet most organizations focus only on technical metrics and ignore team dynamics.

- Little correlation between metrics and business value: Many organizations measure what's easy to track rather than what drives real business outcomes. As a result, they optimize for metrics that don't meaningfully impact the organization's success.

- Limited ability to act on the data: Research by Dr. Nicole Forsgren (co-author of Accelerate) shows that without context about organizational structure, team interactions, and environmental factors, technical metrics alone aren't enough to diagnose performance differences across teams.

These limitations leave engineering leaders with plenty of data but insufficient guidance on what to change.

Unlike frameworks that focus narrowly on deployment statistics (DORA) or that provide theoretical models without clear measurement approaches (SPACE), WAVE addresses the full spectrum of factors that influence engineering effectiveness. Most importantly, it recognizes that engineering KPIs are interconnected. Improvements in one area cascade through the entire system.

WAVE is based on our data science findings and deep experience partnering with engineering leaders. Each category below offers a small group of dimensions and metrics that provide opportunities for actionable intervention. WAVE provides manageable clarity while still addressing the complexity of a sociotechnical system.

The WAVE Framework consists of four interconnected components:

- Ways of Working (W): Measures cultural elements that enable delivery
- Alignment (A): Captures how well engineering efforts connect to business value
- Velocity (V): Tracks the flow of work through the system
- Environment Efficiency (E): Evaluates system quality and friction

Each dimension of WAVE is summarized by a key engineering metric, which serves as the lagging indicator for that area. That metric is the outcome of the inputs that enable good engineering; those inputs are leading indicators of performance and are captured in the lower section of the table below.

For example, improving engineering team health inputs like psychological safety, meeting cadence, and mission alignment tends to reduce overwhelm and increase the time developers spend in deep work. The same applies to AI perception, where the inputs include guideline clarity and shared best practices.

Instead of treating engineering KPIs in isolation, WAVE recognizes the connections between different aspects of engineering work. Besides coding, engineering also includes all of your team's interactions with the product, users, and cross-functional teams.

The WAVE Framework creates a diagnostic map that helps engineering leaders understand the relationship between different dimensions of performance. This enables targeted improvements rather than isolated optimizations.

Deep work

Deep work metrics track the average number of uninterrupted hours per day that developers can dedicate to focused coding. Cal Newport's book Deep Work argues that uninterrupted focus is essential for complex cognitive tasks like software development.

This concept is further supported by research from the University of California, Irvine, which found that after an interruption, knowledge workers take an average of 23 minutes to return to their original task. For software engineers, context switching is particularly costly: frequent interruptions lead to increased defect rates and longer completion times for complex programming tasks.
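To make "daily uninterrupted hours" concrete, here is a minimal sketch (not Uplevel's implementation) that computes deep work time for one workday from a list of interruptions such as meetings. It assumes a fixed workday window and a hypothetical `MIN_BLOCK` threshold of two hours: shorter gaps between interruptions are treated as fragmented time rather than deep work. The function names are illustrative.

```python
from datetime import datetime, timedelta

# Hypothetical threshold: only gaps at least this long count as deep work.
MIN_BLOCK = timedelta(hours=2)


def deep_work_hours(day_start, day_end, interruptions, min_block=MIN_BLOCK):
    """Hours of uninterrupted time in one workday.

    interruptions: (start, end) datetime pairs, e.g. meetings or pages.
    Only gaps of at least `min_block` are counted.
    """
    total = timedelta()
    cursor = day_start  # end of the latest interruption processed so far
    for start, end in sorted(interruptions):
        # Clip the interruption to the workday; skip it if nothing is left
        # or if it is fully covered by an earlier interruption.
        start, end = max(start, day_start), min(end, day_end)
        if start >= end or end <= cursor:
            continue
        if start - cursor >= min_block:
            total += start - cursor
        cursor = end
    # Count the stretch between the last interruption and the end of the day.
    if day_end - cursor >= min_block:
        total += day_end - cursor
    return total.total_seconds() / 3600


def average_daily_deep_work(days):
    """days: list of (day_start, day_end, interruptions) tuples."""
    if not days:
        return 0.0
    return sum(deep_work_hours(*day) for day in days) / len(days)
```

For a 9:00 to 17:00 day with meetings at 10:00 to 10:30 and 13:00 to 14:00, the one-hour morning gap falls below the threshold, so `deep_work_hours` returns 5.5 (2.5 hours before lunch plus 3 hours in the afternoon). A single short meeting placed in the middle of a morning can therefore erase more deep work than its own duration.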

Team health

Team health metrics provide a consolidated view of engineering teams' psychological safety, collaboration effectiveness, and overall engagement. This approach is grounded in Google's Project Aristotle research, which identified psychological safety as the most important factor in team effectiveness.

In software engineering specifically, a 2024 study in Empirical Software Engineering found that teams with established psychological safety were more invested in software quality. The study showed these teams demonstrate "collective problem-solving, pooling their collective intellectual efforts and experience to tackle quality-related challenges."

When you track team health over time, you can identify early warning signs of burnout, disengagement, or collaboration challenges before they impact delivery.

AI perception

This dimension captures AI maturity: an organization's readiness for AI adoption, reflected in its standard operating procedures, tool coherence, and leadership clarity. As AI performance compounds (by one estimate, roughly 5x every two years, a trend sometimes called Huang's Law), understanding AI's impact on ways of working is becoming essential for organizations seeking a competitive advantage.

Organizations and teams with high AI maturity establish clear SOPs for tool usage. They identify best practices and recommended use cases. They ensure compliance and security, maintain coherent toolsets, and provide clear leadership direction about AI strategy. This creates effective ways of working where the focus remains on value creation rather than navigating organizational confusion. Teams with low maturity experience organizational drag. They waste time on tool decisions and worry about compliance rather than leveraging AI for productivity gains.

Allocation of effort

Resource allocation metrics track the actual distribution of engineering effort across new value creation, technical debt, and maintenance work. Unlike self-reported time allocations, data-driven measurements provide an objective view of where engineering time is actually spent.

In most organizations, developers believe they spend more time on new features than they actually do when their work is objectively analyzed. Our own research puts the average time spent on new value creation at just under 20%: less than one day out of five.
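The arithmetic behind an allocation breakdown is simple once work items are categorized. The sketch below is an illustration, not Uplevel's method; it assumes each work item has already been labeled with a category (the names `new_value`, `tech_debt`, and `maintenance` are hypothetical) and carries an effort figure in some consistent unit such as hours or engineering days.

```python
from collections import Counter


def allocation_shares(work_items):
    """Share of total effort per category, as fractions that sum to 1.

    work_items: iterable of (category, effort) pairs. The category labels
    and the effort unit (hours, engineering days, story points) are assumptions.
    """
    totals = Counter()
    for category, effort in work_items:
        totals[category] += effort
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {category: effort / grand_total for category, effort in totals.items()}


# Example: one team's effort over a period, in engineering days.
items = [("new_value", 3), ("maintenance", 8), ("tech_debt", 4), ("maintenance", 5)]
shares = allocation_shares(items)
# Translate the new-value share into days of a five-day week.
new_value_days_per_week = shares["new_value"] * 5
```

In this made-up example, new value creation gets 15% of effort, or about three quarters of a day per week. The hard part in practice is the categorization step, which this sketch takes as given.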

Planning effectiveness

Planning effectiveness reflects how well teams understand their work, capacity, and alignment with evolving priorities. It measures requirements churn, clarity of prioritization, connection to business value, epic lead time, and plan phase duration.

When teams consistently deliver what they commit to, it suggests a healthy balance between ambition and realism. Stable requirements indicate clarity in what needs to be built. This minimizes churn and rework that delay value delivery.

As always, however, context matters. These metrics should not be treated as success criteria on their own. A high sprint completion rate, for instance, could mask underlying issues if teams are playing it safe by undercommitting. It could also hide problems if they're delivering work that's no longer relevant due to shifting priorities.

Instead, planning effectiveness is a signal to detect misalignments in team capacity, requirement clarity, or cross-functional communication. When planning metrics fluctuate significantly, it may indicate that teams lack the information or autonomy needed to make reliable commitments. This can delay or derail the delivery of customer value.
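As a rough illustration of reading planning signals together rather than in isolation, the sketch below computes two of them for one sprint: the completion rate of the original commitment and scope churn (issues added or removed after the sprint started, relative to the commitment). The inputs as sets of issue keys and both formulas are assumptions for illustration, not Uplevel's definitions.

```python
def sprint_signals(committed, completed, added, removed):
    """Two planning signals for one sprint; all inputs are sets of issue keys.

    completion_rate: share of the original commitment that was completed.
    scope_churn: issues added or removed after the sprint started,
    relative to the size of the original commitment.
    """
    if not committed:
        raise ValueError("sprint has no committed issues")
    completion_rate = len(committed & completed) / len(committed)
    scope_churn = (len(added) + len(removed)) / len(committed)
    return completion_rate, scope_churn


rate, churn = sprint_signals(
    committed={"A", "B", "C", "D"},
    completed={"A", "B", "C", "E"},
    added={"E"},    # pulled in mid-sprint
    removed={"D"},  # dropped mid-sprint
)
```

Here 75% of the commitment was delivered, but scope churn is 50%, which is the kind of combination that calls for a conversation about requirement clarity rather than a verdict on the team.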

User alignment

Uplevel's user feedback cycle score measures how quickly teams receive and incorporate user feedback after releasing features. It tracks the frequency and type of user engagement, user feedback cycle time, and customer satisfaction scores.

Short user feedback cycles are a leading indicator of engineering alignment to value. They create a continuous loop of validation between what is being built and what users actually need. When feedback is rapid and frequent, teams can confirm whether their work delivers meaningful outcomes. This enables faster course corrections.

In our experience, this is one of the most underrated metrics. If your team gets feedback late or not at all, information is probably getting stuck between departments.

Velocity score

Uplevel's velocity score combines PR cycle time, PR velocity, issue velocity, and deployment frequency (where available) into a comprehensive throughput measurement. This consolidated view reveals whether teams can consistently deliver completed work rather than just generate activity.

When evaluating velocity metrics, avoid comparing teams against each other. Teams operate under different contexts — varying codebases, workflows, review cultures, and priorities make cross-team comparisons misleading. Instead, compare each team's current performance against its own historical baseline to identify genuine improvement opportunities.

The most valuable insights emerge from team-level aggregation rather than individual tracking. This shifts focus toward systemic improvements that benefit collective velocity and collaboration.
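Comparing a team against its own baseline can be done with very little machinery. This minimal sketch assumes a per-period velocity measure (for example, PRs merged per week) stored oldest first, and uses the median of the last `window` periods as the baseline; the six-period window is a hypothetical default, and the median is chosen so that one unusual period does not distort the baseline.

```python
from statistics import median


def change_vs_baseline(history, current, window=6):
    """Percent change of `current` versus this team's own recent baseline.

    history: this team's past values for one metric, oldest first.
    The baseline is the median of the last `window` periods.
    """
    recent = history[-window:]
    if not recent:
        raise ValueError("need at least one historical period")
    baseline = median(recent)
    if baseline == 0:
        raise ValueError("baseline is zero; percent change is undefined")
    return (current - baseline) / baseline * 100
```

For example, with a history of `[10, 12, 11, 9, 13, 12, 30]` and a current value of 15, the baseline is the median of the last six periods (12), so the change is +25%; the outlier period of 30 barely moves it. For lower-is-better metrics such as PR cycle time, a negative change is the improvement. Each team is compared only with itself, never with another team.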

Handoffs

Handoff metrics evaluate both frequency and quality of work transitions between teams, individuals, or process stages. Each handoff introduces coordination overhead and potential communication gaps that slow delivery and increase error rates.

Research shows that minimizing handoffs through cross-functional teams can result in significant improvements. One McKinsey study documented a 45% decrease in code defects and 20% faster time to market after switching to cross-functional teams that reduced coordination dependencies.

Most organizations underestimate handoff costs because the delays appear as waiting time rather than active work. This makes them invisible in traditional productivity measurements.
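One simple way to start quantifying handoff frequency is to count ownership changes in each work item's history. The sketch below assumes you can export, per item, the chronological sequence of owners (a person, team, or process stage); the data shape is hypothetical, and the sketch measures only frequency, not handoff quality.

```python
def count_handoffs(owners):
    """Number of ownership changes in one work item's chronological history.

    owners: sequence of whoever held the item at each recorded event
    (a person, team, or process stage).
    """
    return sum(1 for before, after in zip(owners, owners[1:]) if before != after)


def average_handoffs(items):
    """items: mapping of work item id -> chronological list of owners."""
    if not items:
        return 0.0
    return sum(count_handoffs(owners) for owners in items.values()) / len(items)


history = {
    "PAY-1": ["backend", "backend", "qa", "backend", "ops"],  # 3 handoffs
    "PAY-2": ["web"],                                        # 0 handoffs
}
```

`count_handoffs(history["PAY-1"])` is 3, because the item bounced from backend to QA, back to backend, and then to ops. Repeated returns to an earlier owner are a useful pattern to look for, since each round trip usually adds waiting time.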

Pull request reviews

Pull request review metrics examine PR complexity, PR quality, and review quality and time to assess both work structure and collaborative effectiveness. This measurement reveals whether teams create reviewable code changes and conduct meaningful peer evaluation.

PR complexity tracks oversized changes that create review bottlenecks. Large PRs are harder to review thoroughly. This increases defect rates and cycle times. PR quality measures whether changes include proper descriptions, link to tracking systems, and maintain reasonable cycle times.

Review quality evaluates whether the collaborative process catches meaningful problems. Effective reviews identify functional defects and architectural issues when remediation costs are lowest. They don't just catch style preferences that automated tools can address.

Teams with high PR quality and effective review processes ship faster with fewer production issues. Those with poor practices accumulate technical debt and spend more time fixing downstream problems.
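The PR complexity and PR quality checks described above can be approximated with a few rules. This is a minimal sketch, not Uplevel's scoring: the 400-changed-line threshold and the ticket-key pattern (for example `PAY-123`) are hypothetical conventions to tune for your own codebase and tracker, and the `PullRequest` type is illustrative.

```python
import re
from dataclasses import dataclass

# Hypothetical conventions; adjust to your own codebase and issue tracker.
MAX_CHANGED_LINES = 400
TICKET_PATTERN = re.compile(r"\b[A-Z][A-Z0-9]+-\d+\b")  # e.g. "PAY-123"


@dataclass
class PullRequest:
    title: str
    description: str
    additions: int
    deletions: int


def pr_flags(pr):
    """Return the list of rule violations for one pull request."""
    flags = []
    # Complexity: oversized changes are hard to review thoroughly.
    if pr.additions + pr.deletions > MAX_CHANGED_LINES:
        flags.append("oversized")
    # Quality: reviewers need context about what changed and why.
    if not pr.description.strip():
        flags.append("missing description")
    # Quality: link the change back to a tracked piece of work.
    if not TICKET_PATTERN.search(pr.title + " " + pr.description):
        flags.append("no linked ticket")
    return flags
```

A PR titled "Refactor" with no description and 1,100 changed lines would trip all three rules. Aggregating these flags per team over time, rather than per author, keeps the focus on process.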

Recovery

Recovery metrics combine lead time for changes, change failure rate, and mean time to repair (MTTR) — three of the four DORA metrics — to assess system resilience. These measurements reveal how quickly teams can deploy fixes and maintain stability under operational pressure.

Organizations with faster recovery capabilities demonstrate robust testing, monitoring, and deployment automation that enable rapid issue detection and resolution. The 2023 DORA report specifically highlighted that elite performers excel not just in deployment metrics. They also build cultures that support sustainable delivery.
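The three recovery metrics can be computed from a deployment log. This sketch assumes a hypothetical record per deployment with commit time, deploy time, whether it caused a production failure, and when service was restored. As a simplification, it measures time to restore from the deployment time rather than from failure detection, which is what DORA's definition uses.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    committed_at: datetime                   # when the change was committed
    deployed_at: datetime                    # when it reached production
    failed: bool = False                     # did it cause a production failure?
    restored_at: Optional[datetime] = None   # when service was restored, if failed


def recovery_metrics(deployments):
    """Lead time for changes (median), change failure rate, and MTTR (mean)."""
    if not deployments:
        raise ValueError("no deployments to analyze")
    lead_time = median(d.deployed_at - d.committed_at for d in deployments)
    failures = [d for d in deployments if d.failed]
    change_failure_rate = len(failures) / len(deployments)
    # Simplification: the outage is assumed to start at deployment time.
    repairs = [d.restored_at - d.deployed_at for d in failures if d.restored_at]
    mttr = sum(repairs, timedelta()) / len(repairs) if repairs else None
    return {"lead_time": lead_time, "change_failure_rate": change_failure_rate, "mttr": mttr}
```

With four deployments whose lead times are 4, 6, 8, and 10 hours, two of which failed and took 1 and 3 hours to restore, this returns a 7-hour median lead time, a 50% change failure rate, and a 2-hour MTTR.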

Code quality

Code quality consolidates bug rates, customer-found defects, cyclomatic complexity, and support escalations into an integrated quality assessment. This reflects the fact that quality issues compound: high complexity increases bugs, which in turn drive support escalations and customer-found defects.

Detecting defects earlier in the development process sharply reduces the cost of remediation. By widely cited estimates, defects found in production can cost up to 100x more to fix than those found during code review, which makes upstream quality investments essential for sustainable delivery.

Friction and flow

Friction measures systemic obstacles through architecture complexity, tooling effectiveness, deployment processes, and flow optimization. These factors create organizational drag that slows delivery regardless of team capability.

In knowledge work, including software development, items typically spend 70-85% of their time waiting rather than being actively worked on. That corresponds to a flow efficiency of just 15-30%. This represents a large improvement opportunity that most organizations overlook, because waiting appears as white space rather than as visible inefficiency.
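Flow efficiency is commonly defined as active work time divided by total lead time (active plus waiting time). The helper below is a minimal sketch of that formula; the time unit is up to you, as long as both inputs use the same one.

```python
def flow_efficiency(active_time, waiting_time):
    """Percentage of total lead time during which an item is actively worked on."""
    total = active_time + waiting_time
    if total <= 0:
        raise ValueError("total lead time must be positive")
    return 100 * active_time / total
```

An item that spends 85% of its lead time waiting has a flow efficiency of 15%, and one that waits 70% of the time has 30%. Likewise, a ticket that took ten days end to end but received only two days of active work sits at 20%: shortening the eight days of waiting usually does more for delivery speed than making the two days of work faster.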

Implementing the WAVE framework doesn't stop at collecting better metrics. The real change lies in how engineering organizations understand and improve. Sustainable transformation requires both measurement systems and enabling mechanisms for improvement.

“Having data helps the conversations I have with teams. 'You didn't work on these goals this quarter. Why was that? What can we do to increase the time you're delivering value?' Then we can take action. So that's a lot of the work we're doing with Uplevel.”

Francisco Trindade, VP Engineering at Braze

As you consider your own organization's effectiveness, ask yourself: Do you have visibility into all four WAVE dimensions? Can you identify which dimensions currently limit your performance? And most importantly, do you have a methodology to turn those insights into sustainable improvement?

As engineering systems grow more complex, the organizations that succeed recognize effectiveness as an ongoing practice. It requires attention to the technical, social, and environmental realities of how teams work. When engineering leaders shift from isolated metrics to the integrated WAVE approach, they transform measurement from a reporting exercise into a powerful catalyst for sustainable improvement.

Ready to assess your team's engineering effectiveness?

Schedule a demo today and find out how leaders use Uplevel to build top-performing engineering organizations.
