The "DORA metrics," developed by Google's DevOps Research and Assessment team, emerged from a five-year study of 31,000 developers. This research, published in the 2019 State of DevOps report, introduced four key metrics for software delivery performance. A fifth was added in 2021. The DORA metrics have become one of software development's most prominent frameworks. In fact, many organizations that use DORA consider it synonymous with these key metrics.

The problem? The DORA metrics only tell half the story.

Today, forward-thinking companies, DevOps experts, and even DORA team members are pulling back on the reins. It’s not that the five metrics are wrong; it’s that they measure efficiency more than performance. But efficiency is only one part of the DORA Core Model, which looks at engineering effectiveness in context.

DORA Engineering Metrics: What Are They and What Do They Measure?

The DORA team outlines five DevOps metrics that fall into three main categories:

Throughput (velocity) metrics

Throughput metrics indicate delivery speed, presenting a way to measure velocity outside of arbitrary measurements like story points.

Lead time for changes indicates the amount of time between code commit and deployment to production. Are automated tests and a healthy CI/CD pipeline reducing the time a pull request sits in queue? Is there a healthy code review process for what must be done manually?

Deployment frequency quantifies how often teams are successfully releasing code to production. Best practice favors a high deployment frequency. Deploying to production more often is a byproduct of streamlined processes and smaller, less complex PRs, which make for healthier code reviews and give reviewers more opportunity to catch errors.
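To make the two throughput definitions concrete, here is a minimal Python sketch that computes them from deployment records. It assumes each production change is recorded with a commit timestamp and a deployment timestamp; the `Change` record, the function names, and the use of the median for lead time are illustrative assumptions, not DORA's or Uplevel's implementation.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class Change:
    """Hypothetical record for one change that reached production."""
    committed_at: datetime
    deployed_at: datetime


def lead_time_for_changes(changes: list[Change]) -> timedelta:
    """Median time from code commit to production deployment (median is an assumed choice)."""
    if not changes:
        raise ValueError("need at least one change")
    seconds = [(c.deployed_at - c.committed_at).total_seconds() for c in changes]
    return timedelta(seconds=median(seconds))


def deployment_frequency(deploy_times: list[datetime], start: datetime, end: datetime) -> float:
    """Average number of successful production deployments per week in [start, end)."""
    weeks = (end - start) / timedelta(weeks=1)
    if weeks <= 0:
        raise ValueError("end must be after start")
    in_window = [t for t in deploy_times if start <= t < end]
    return len(in_window) / weeks


# Example: three changes, all deployed within a two-week window
base = datetime(2024, 1, 1)
changes = [
    Change(base, base + timedelta(hours=4)),
    Change(base, base + timedelta(hours=10)),
    Change(base, base + timedelta(hours=30)),
]
print(lead_time_for_changes(changes))  # 10:00:00
print(deployment_frequency([c.deployed_at for c in changes], base, base + timedelta(weeks=2)))  # 1.5
```

Normalizing by the number of weeks in the window keeps deployment frequency comparable across reporting periods of different lengths.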

Stability (quality) metrics

Change failure rate captures the percentage of deployments that require remediation. How much of the time was there a failure in production that needed a hotfix, patch, or rollback?

MTTR (mean time to repair) is the average time it takes to restore service when an incident occurs. The objective is to restore as soon as possible, ideally before users are affected. When software can be repaired quickly, that’s a sign of healthy architecture, incident response systems, and a well-coordinated software development team.
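Both stability metrics reduce to simple arithmetic once deployments and incidents are recorded. This sketch assumes each deployment is flagged when it needed a hotfix, patch, or rollback, and that each incident has a start time and a restore time; the record types and function names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Deployment:
    """Hypothetical deployment record."""
    deployed_at: datetime
    needed_remediation: bool  # True if a hotfix, patch, or rollback was required


@dataclass
class Incident:
    """Hypothetical incident record."""
    started_at: datetime
    restored_at: datetime


def change_failure_rate(deployments: list[Deployment]) -> float:
    """Fraction of deployments that required remediation."""
    if not deployments:
        raise ValueError("need at least one deployment")
    failures = sum(1 for d in deployments if d.needed_remediation)
    return failures / len(deployments)


def mean_time_to_restore(incidents: list[Incident]) -> timedelta:
    """Average time from incident start to service restoration."""
    if not incidents:
        raise ValueError("need at least one incident")
    total = sum((i.restored_at - i.started_at for i in incidents), timedelta())
    return total / len(incidents)


t0 = datetime(2024, 3, 1, 9, 0)
# Four daily deployments; only the third one needed remediation
deployments = [Deployment(t0 + timedelta(days=d), needed_remediation=(d == 2)) for d in range(4)]
incidents = [
    Incident(t0, t0 + timedelta(minutes=30)),
    Incident(t0 + timedelta(days=2), t0 + timedelta(days=2, minutes=90)),
]
print(change_failure_rate(deployments))  # 0.25
print(mean_time_to_restore(incidents))  # 1:00:00
```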

Reliability

Reliability is often overlooked (you'll still see many references to the "four" DORA metrics), but was added later as its own category and metric. It measures operational performance: your team's ability to keep promises about the product they're building. This might be captured in artifacts like SLAs and error budgets.
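An error budget turns a reliability promise into a number a team can track. As a minimal sketch, assuming an availability SLO expressed as a fraction (for example, 99.9%) over a fixed window, the budget is the share of that window the service may be unavailable: a 99.9% target over 30 days allows about 43.2 minutes of downtime. The function names are illustrative.

```python
from datetime import timedelta


def error_budget(slo_target: float, window: timedelta) -> timedelta:
    """Downtime allowed by an availability SLO over the given window."""
    if not 0 < slo_target < 1:
        raise ValueError("slo_target must be between 0 and 1")
    return window * (1 - slo_target)


def budget_remaining(slo_target: float, window: timedelta, downtime: timedelta) -> float:
    """Fraction of the error budget still unspent; negative means the SLO was missed."""
    budget = error_budget(slo_target, window)
    return (budget - downtime) / budget


window = timedelta(days=30)
print(error_budget(0.999, window))  # 0:43:12
print(budget_remaining(0.999, window, timedelta(minutes=21, seconds=36)))  # 0.5
```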

Elite performers maximize speed and output when it comes to deploying functional code. But what if engineering teams are focusing on the wrong priorities or burning out to meet production demands? What if they're jeopardizing their ability to ship functional code in the future? Are they still high-performing teams?

What Software Efficiency Metrics Can’t Tell You

At Uplevel, we look at DORA metrics — but we look at them as part of a larger picture. While they focus on team efficiency, they don't reflect effectiveness.

We define effectiveness as working on the right things in the right ways. This means aligning teams around the right priorities and giving them time to work on them. As engineering leaders take on more responsibility for delivering business outcomes, the metrics themselves become just one slice of a much broader conversation. It doesn't matter if you're moving faster if you're not moving in the right direction.

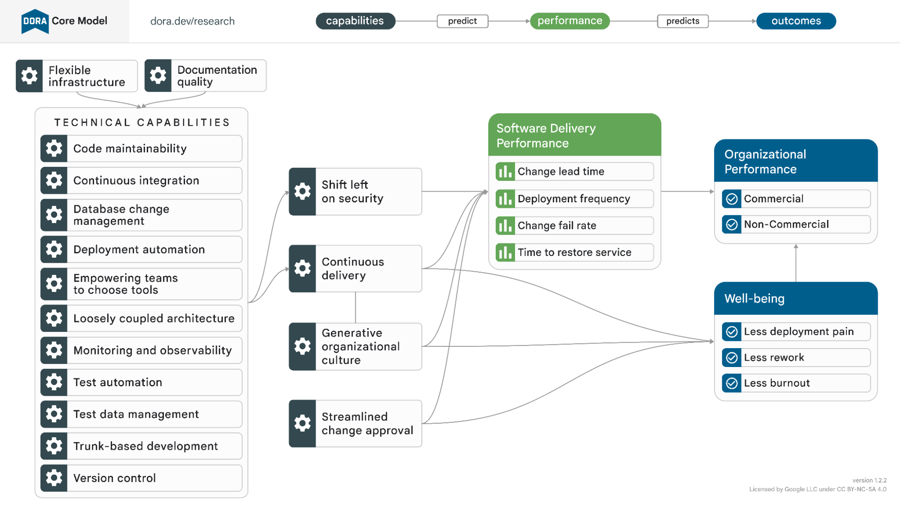

The DORA DevOps team acknowledges this larger context in their complex Core Model. Members of the original DORA team also helped introduce the SPACE framework in 2021, which brings developer satisfaction and well-being into the productivity picture (though it lacked distinct metrics). The 2023 State of DevOps report emphasized developer well-being as a way to avoid burnout. The 2024 report examines the potential impact of AI on value delivery, developer sentiment, and business outcomes, as well as productivity.

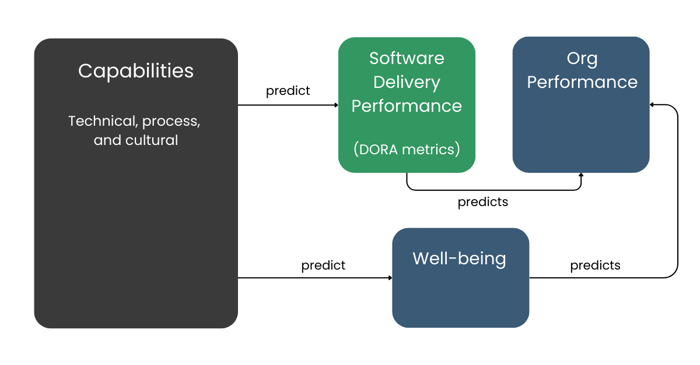

Let’s come back to the DORA Core Model, now simply represented like this:

There are a few things to notice here (beyond the fact that the metrics are actually just a part of the whole picture):

- DORA metrics are lagging indicators. They will give you a picture of delivery performance, but they won’t explain any of the context behind it. You'll need more context to know which capabilities are strong or lacking in your organization.

- They don’t capture well-being, which is both an outcome of your capabilities and a predictor of organizational performance.

Why don’t classic software engineering metrics attempt to capture problems early? Given well-being's role in predicting organizational performance, why aren't team health metrics included?

Because people data is difficult to collect and interpret.

Outside of surveys, how can teams measure “well-being,” “burnout,” or “work recovery”? Available data is evolving, as is our ability to surface insights leading to high-value outcomes.

How to Measure Engineering Effectiveness Holistically

While the State of DevOps reports rely on self-reported survey data, engineering intelligence solutions offer telemetry from developer tools to quantify team performance, track trends over time, and facilitate organizational improvement.

No framework for understanding engineering performance is perfect. Capturing the nuance and complexity of effectiveness across people and processes is difficult. People data is messy, after all. But advanced ML models and composite metrics now offer greater visibility into the larger picture outlined by the DORA team in the Core Model.

At Uplevel, we've found that engineering leaders struggle with three often competing mandates: delivering efficiently, working on the right initiatives, and working sustainably. DORA metrics measure delivery efficiency and quality, but not whether teams are focused on the right initiatives or whether they are working sustainably.

Measuring efficiency is critical for our customers. Uplevel measures the four DORA metrics to assess delivery, throughput, and stability. But if DORA is not the whole story, what other insights do we surface — and why?

Allocation Insights

A team working on quick, low-priority deployments may appear more effective than one working slowly on a complicated feature.

With DORA metrics alone, you might see that your teams are deploying quickly and frequently and infer they have more capacity. The problem? You have no way to quantify it.

For example, if you’re measuring deployment frequency per week, how much of that week did your teams actually spend on delivery? If their days were packed with meetings and other interruptions, they may not have had the capacity to deploy much new code. In that case, low deployment frequency may not accurately depict team efficiency. They may have performed at a high level given their lower capacity.

That’s why aligning engineering efforts with business priorities and value delivery is crucial.

Uplevel's allocation insights reveal how much time your organization spends on new-value (demand) work versus KTLO ("keeping the lights on") work. By understanding how teams spend their time, you can direct effort toward impactful activities and better estimate delivery timelines.
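As an illustration of what an allocation view computes, here is a minimal sketch that turns categorized hours into each category's share of total effort. It assumes every work item has already been tagged as new-value work or KTLO and has hours attached; how Uplevel actually classifies and attributes work isn't covered here, so the category labels and the `allocation` function are hypothetical.

```python
from collections import defaultdict


def allocation(work_items: list[tuple[str, float]]) -> dict[str, float]:
    """Share of total hours spent in each work category."""
    totals: dict[str, float] = defaultdict(float)
    for category, hours in work_items:
        totals[category] += hours
    grand_total = sum(totals.values())
    if grand_total == 0:
        raise ValueError("no hours recorded")
    return {category: hours / grand_total for category, hours in totals.items()}


# Hypothetical tagged work items: (category, hours)
items = [
    ("new_value", 24.0),
    ("ktlo", 40.0),
    ("new_value", 36.0),
]
print(allocation(items))  # {'new_value': 0.6, 'ktlo': 0.4}
```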

This visibility helps align engineering with other business functions, surfacing metrics that support DORA’s goal of creating a transparent value stream. We measure how much time teams have for deep work, which we define as uninterrupted blocks of at least two hours. Our deep work metrics account for planned and unplanned interruptions throughout the day, such as meetings and chat.
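The deep work definition lends itself to a small calculation. The sketch below, assuming a single working day and a list of interruptions given as (start, end) times, adds up every gap of at least two hours between interruptions. Modeling a chat thread as a short interval, and the function and helper names, are illustrative assumptions; this is not Uplevel's production logic.

```python
from datetime import datetime, timedelta

# Two-hour minimum from the deep work definition
MIN_DEEP_WORK_BLOCK = timedelta(hours=2)


def deep_work_time(
    day_start: datetime,
    day_end: datetime,
    interruptions: list[tuple[datetime, datetime]],
) -> timedelta:
    """Total time in uninterrupted gaps of at least MIN_DEEP_WORK_BLOCK."""
    # Keep interruptions that overlap the working day, clipped to it, in time order
    busy = sorted(
        (max(start, day_start), min(end, day_end))
        for start, end in interruptions
        if start < day_end and end > day_start
    )
    total = timedelta()
    cursor = day_start  # end of the latest interruption seen so far
    for start, end in busy:
        gap = start - cursor
        if gap >= MIN_DEEP_WORK_BLOCK:
            total += gap
        cursor = max(cursor, end)  # handles overlapping interruptions
    if day_end - cursor >= MIN_DEEP_WORK_BLOCK:
        total += day_end - cursor
    return total


def at(hour: int, minute: int = 0) -> datetime:
    """Hypothetical helper: a time on one example workday."""
    return datetime(2024, 5, 6, hour, minute)


interruptions = [
    (at(10), at(10, 30)),      # stand-up
    (at(13), at(14)),          # planning meeting
    (at(15, 30), at(15, 35)),  # chat thread
]
print(deep_work_time(at(9), at(17), interruptions))  # 2:30:00
```

In this example the day has eight working hours, yet only the 10:30 to 13:00 stretch qualifies; the other gaps are too short to count as deep work.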

Allocation and capacity insights are a measure of organizational focus. What are your teams working on? How much time do they have to work on it? Viewing your engineering efforts through this lens can give you a more accurate idea of effectiveness and performance.

How Accolade Meets Commitments with Uplevel

20% increase in deep work and 205% increase in deployments

Work Sustainability and Well-Being

Developer burnout is a significant issue, highlighted in every DORA report since 2019. Rightly so. It doesn't take a team of researchers to figure out that overworked, unmotivated employees threaten organizational performance.

But the DORA metrics don't include burnout risk. It's difficult to quantify well-being and link it to objective performance. Still, waiting for performance measures to decline before recognizing burnout risk isn't helpful. True measures of engineering performance should account for burnout early, spurring leaders to act before DORA metrics drop.

To quantify burnout risk at the team level, Uplevel uses composite metrics. One of these, Sustained Always On, combines low deep work time with “Always On” behaviors (Jira, messaging, and calendar activity beyond normal hours). These metrics provide context for understanding efficiency and sustainability over time.
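As a purely hypothetical sketch of how a composite like this could be built (not Uplevel's actual formula), a week can count as strained when deep work time is low and after-hours activity is high, with the flag raised only when strain persists for several consecutive weeks. Every threshold and name in the code is an assumption chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen only for illustration
LOW_DEEP_WORK_HOURS = 6.0     # weekly deep work below this counts as low
HIGH_AFTER_HOURS_EVENTS = 10  # weekly after-hours events above this count as high
SUSTAINED_WEEKS = 3           # consecutive strained weeks needed to raise the flag


@dataclass
class WeeklySignals:
    """One team's signals for one week (hypothetical shape)."""
    deep_work_hours: float
    after_hours_events: int  # Jira, messaging, and calendar activity outside normal hours


def is_strained(week: WeeklySignals) -> bool:
    """A week is strained when deep work is low AND after-hours activity is high."""
    return (
        week.deep_work_hours < LOW_DEEP_WORK_HOURS
        and week.after_hours_events > HIGH_AFTER_HOURS_EVENTS
    )


def sustained_always_on(weeks: list[WeeklySignals]) -> bool:
    """True if at least SUSTAINED_WEEKS strained weeks occur in a row."""
    streak = 0
    for week in weeks:
        streak = streak + 1 if is_strained(week) else 0
        if streak >= SUSTAINED_WEEKS:
            return True
    return False


strained = WeeklySignals(deep_work_hours=4.0, after_hours_events=15)
healthy = WeeklySignals(deep_work_hours=12.0, after_hours_events=2)
print(sustained_always_on([strained, strained, strained]))           # True
print(sustained_always_on([strained, healthy, strained, strained]))  # False
```

Requiring a streak rather than a single bad week keeps one crunch week from raising an alarm, which fits the idea of sustained risk.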

AI-Powered Context for DORA Metrics

DevOps efficiency and effectiveness metrics enable enterprise companies to deliver value in a tough market. Allocation and burnout risk are important to the larger context, but they're often missing because they are difficult to capture.

Thankfully, you no longer have to go without.

Modern engineering leadership goes beyond homegrown BI platforms or quarterly dev surveys. Leveraging data science best practices and people health capabilities, Uplevel provides you with the comprehensive team telemetry you need to turn engineering data — including DORA metrics — into actionable change.