Everyone is feeling FOMO about AI. And for good reason: The risks of missing out are real.

But FOMO is a dangerous frame of mind. Adopting AI merely means you haven’t missed out. It doesn’t mean you’ve actually used AI to its full extent, or done all you can to prepare your company for the technology changes to come.

Achieving transformational gains requires an approach beyond adopting AI and throwing it at problems. Learning to measure is the best way to position yourself for AI today, and it’s the best way to position yourself for every subsequent technology wave.

AI adoption is table stakes

When ChatGPT launched in 2022, the potential of generative AI was apparent. There were a handful of skeptics, but few tech leaders thought it would be a fad.

In the years since, the nature of the change generative AI is driving has become clearer. So far, we’ve seen a surprising lack of company-level disruption. As Byrne Hobart writes, “The list of big tech companies that are winning in AI is pretty close to the list of big tech companies that were previously winners in mobile.”

There are new entrants, such as OpenAI and Anthropic, and legacy companies using AI to get back into the headlines, such as Microsoft. But most of the disruption will likely happen within companies rather than between them. This means AI likely won’t be the kind of technology wave, like the cloud or mobile, where adoption alone could place you well ahead of other companies.

Leaders who adopt AI now won’t have anything to brag about later; everyone knows some level of adoption is necessary. Research is bearing this out: AI adoption is already an expectation, not a pioneering decision.

Uplevel’s own developer surveys across enterprise engineering companies reveal that 85% of developers use AI on a daily or near-daily basis.

That said, a contradiction underlies this widespread adoption: Companies consider themselves ready for AI despite showing few signs that they really are.

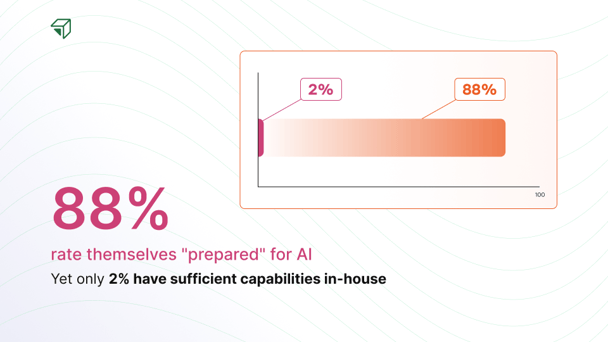

In The AI Measurement Crisis, our survey of more than 100 engineering leaders about AI measurement, 88% of respondents say they’re “AI ready,” but only 2% have sufficient capabilities in-house. This is like claiming you’re ready to win the race before you even own running shoes.

Leaders aren't blind to this tension. The contradiction stems from what I call preparedness theater: claiming readiness to satisfy boards, investors, and colleagues without the psychological safety to admit otherwise.

In reality, most people aren’t ready for AI but can’t admit it. Or, more generously, they misunderstand what it means to be ready. Buying Cursor licenses takes minutes — but can they measure whether AI actually drives business outcomes? Can they even measure inputs and outputs to see what drives business outcomes in the first place? Our research shows the answer is no.

Why traditional engineering metrics fail for AI

Let’s start by repeating a mantra we all know, even though few of us really apply it well: What gets measured gets managed. Yet most engineering leaders lack the AI metrics needed to understand whether their adoption efforts are actually driving value.

Most companies, if they measure AI impact at all, track metrics that can only signal incremental gains. As a result, these companies can see only narrow benefits and pursue limited opportunities. It’s a garbage-in, garbage-out dynamic: Measure minutiae, get minimal results.

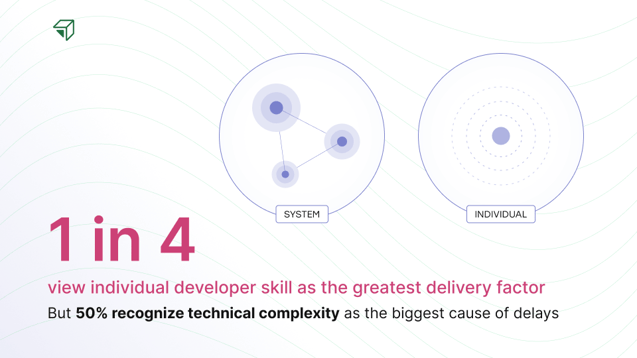

In The AI Measurement Crisis, this preference for minutiae is clear: 36.8% of respondents in our study still believe that high-performing individuals create high-performing teams. Similarly, 23.2% still rate individual developer skill as the biggest delivery factor.

We see a similar mistake in productivity discussions. 35% of respondents believe individual and system performance are equally important, yet 50% cite technical complexity as the top cause of delays. The contradiction is stark: if complex systems slow us down, why do we still prioritize individual performance?

These results reveal a consistent pattern: companies prioritize individual productivity over collaboration, and individual performance over system health. Only 4.2% believe system performance matters more than individual performance.

There’s an issue of revealed preference: Companies might talk about transformation, but their priorities show a focus on individual metrics and incremental gains.

To be clear, measuring and achieving incremental gains isn’t a bad thing. But incremental gains are limiting, and accepting limitations, especially at this stage, can be dangerous to your future.

- If you use AI to decrease toil and tech debt, you might see your implementation as a net positive, even if, in the background, your organizational debt grows.

- If your developers adopt AI coding tools, you might focus on how your individual performance metrics improve, even as your system performance worsens.

- If you adapt to the AI era, you might feel ready for the future even as you remain unprepared for the next big technology wave.

Zoom out and the stakes become clearer. We're facing rising VUCA (Volatility, Uncertainty, Complexity, and Ambiguity) every year. Paying down debt once without changing spending habits means you'll slide right back into debt — whether that's technical, organizational, or financial.

Without measurement, you can't change habits. Without changing habits, you're stuck taking one step forward and two steps back.

The right AI metrics transform adoption into business value

AI is not magical pixie dust that you can sprinkle on everything. You can’t sit back and watch it work. Most companies, however, appear to be doing just that.

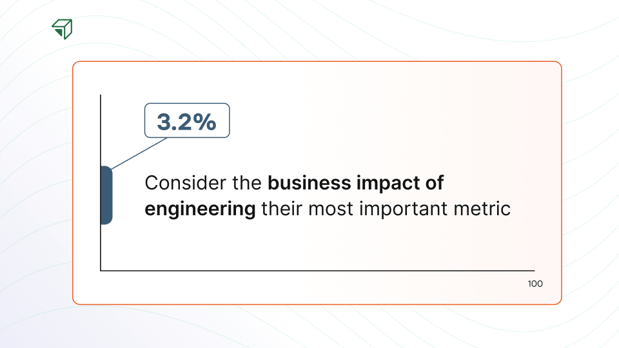

In our research, only 3.2% of respondents considered the business impact of engineering deliverables to be their most valuable metric for improving overall engineering performance. Without AI metrics tied to business outcomes, teams struggle to distinguish between adoption theater and genuine transformation.

This lack of measurement makes adoption harder. When you measure performance tied to outcomes, you can go full steam ahead instead of splitting your focus. When you don’t, you can only hope for the best. You should be able to determine what isn’t working, what is working, and what could work.

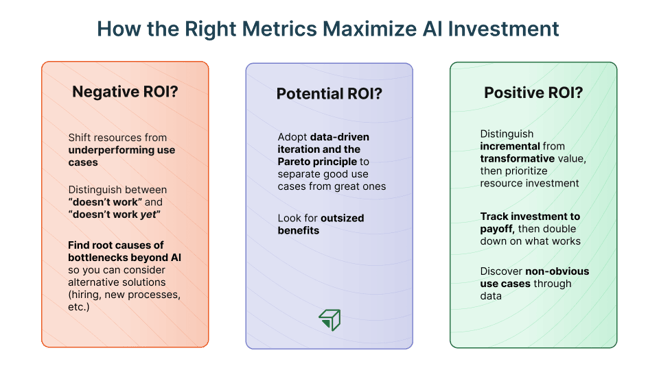

First, which use cases show negative ROI? AI is broadly but not universally beneficial. With the right metrics, you can:

- Identify use cases where adopting AI delivers lower business value, and shift resources from underperforming use cases to ones with demonstrated ROI.

- Distinguish between use cases where AI doesn’t help and ones where AI doesn’t help yet, allowing you to wait until model development reaches the point you need it to.

- Find use cases where hiring more people, reorganizing your team, or implementing a new process, such as test-driven development, could yield greater benefits. Finding the root cause is more important than adding AI anywhere and everywhere.
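As a rough illustration of the arithmetic behind this kind of sorting, here is a minimal Python sketch. It assumes you can attach an estimated benefit and cost to each use case, which is the hard part in practice. The function names, use-case names, and figures are all hypothetical and are not drawn from our research.

```python
def roi(benefit: float, cost: float) -> float:
    """Simple return on investment: net gain divided by cost."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (benefit - cost) / cost


def split_by_roi(use_cases: dict[str, tuple[float, float]]) -> tuple[list[str], list[str]]:
    """Split use cases into (positive, non_positive) by the sign of their ROI."""
    positive, non_positive = [], []
    for name, (benefit, cost) in use_cases.items():
        if roi(benefit, cost) > 0:
            positive.append(name)
        else:
            non_positive.append(name)
    return positive, non_positive


# Hypothetical (benefit, cost) estimates, both in the same currency unit.
use_cases = {
    "test generation": (150_000, 100_000),
    "meeting summaries": (20_000, 40_000),
    "code review assistance": (90_000, 60_000),
}

positive, non_positive = split_by_roi(use_cases)
print("Positive ROI:", positive)
print("Zero or negative ROI:", non_positive)
```

A single ROI figure is only a starting point. A use case in the second group may be one where AI doesn’t help yet, so it is worth re-measuring over time rather than discarding it permanently.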

Which use cases show positive ROI? Many departments and functions can use AI in different ways, but the level of business value they derive can vary. With the right metrics, you can:

- Distinguish between use cases that create incremental value and those that create transformative value, and then prioritize resource investment accordingly.

- Discover use cases that wouldn’t be obvious otherwise by measuring how investments correlate with payoff – enabling you to double down.

- Identify new use cases that go beyond applying AI to old processes, scenarios that use new technologies to do new things.

Which use cases show signs of positive ROI, given the right investment? Following the Pareto principle, a majority of consequences will likely come from a minority of causes. As a result, you should be looking for use cases that aren’t just good but great.

With measurement, you can iterate across use cases and eventually identify – and focus on – use cases that will deliver outsized benefits. These are the use cases that can lead to transformation.
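One way to make the Pareto idea concrete is to rank use cases by measured value and find the smallest group that accounts for most of the total. The sketch below is an assumption-laden example, not a prescribed method: the 80% threshold, the function name `pareto_subset`, and every figure are hypothetical, and it assumes all values are non-negative.

```python
def pareto_subset(value_by_use_case: dict[str, float], share: float = 0.8) -> list[str]:
    """Return the top-ranked use cases whose combined value first reaches
    `share` of the total. Assumes non-negative values."""
    total = sum(value_by_use_case.values())
    if total <= 0:
        return []
    ranked = sorted(value_by_use_case.items(), key=lambda item: item[1], reverse=True)
    selected, running = [], 0.0
    for name, value in ranked:
        selected.append(name)
        running += value
        if running / total >= share:
            break
    return selected


# Hypothetical measured business value per use case (arbitrary units).
measured_value = {
    "code review assistance": 100,
    "test generation": 560,
    "incident triage": 60,
    "documentation drafts": 30,
    "migration scripting": 250,
}

outsized = pareto_subset(measured_value)
print(outsized)
```

With these made-up numbers, two of the five use cases account for 81% of the measured value. Those two are where further investment would go first.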

Organizational change is hard, but identifying the use cases with outsized benefits is a necessary precondition to implementing, scaling, and ultimately changing.

Why the right metrics prepare you for every technology wave

The initial skepticism around generative AI was wrong, but it did have some substantive precedent: Web3.

For a brief period, we were told that Web3 would change everything, but after the fall of FTX and the launch of ChatGPT, it became clear which technology would transform the world and which would affect a niche.

This same pattern will continue to play out, and building the capacity to measure today is the best way to ensure you can test new technologies, reject ideas that don’t work for you, and confidently pursue ideas that demonstrably move the needle – ideas that deliver provable, significant business outcomes.

Test and validate any new technology systematically

Measurements tied to narrow signals, such as development velocity, will be limited by the technologies in question. But measurement tied to business value will be technology-agnostic, allowing you to adopt new processes, tools, and paradigms as they emerge. You can test what works and what doesn’t.

For example, in our work with Avalara, a tax compliance platform, we helped them evaluate GitLab as a unified DevSecOps platform, allowing them to prove the value of infrastructure investment before implementation. With Uplevel, Avalara was able to build a continuous feedback loop to sustain improvements long after adoption.

How Avalara Quantified GitLab Migration ROI with Uplevel

Be prepared for big and small changes

AI, as a whole, is a change every company needs to react to, but not every use case and permutation of AI will be obvious. An incremental benefit for one company might be a significant one for another.

The same pattern applies outside of AI and other hyped technologies. A tool that speeds up security reviews might, for example, provide a small benefit to a fast-moving startup and an enormous benefit to an enterprise-level fintech. Without measurement, the incremental gains that can accumulate into significant gains can remain invisible.

Zoom out on systems-level problems

Without measurement, teams are liable to throw AI at all their problems and hope it helps. This is costly in many ways and yields incremental gains at best.

With measurement, teams can test where AI works best, scale up, and identify where bottlenecks in their systems limit AI’s cascading impact. Teams can then measure their systems before, during, and after AI adoption, and address real bottlenecks (e.g., testing) rather than perceived ones (e.g., coding speed).
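To show what a before-and-after comparison might look like, here is a small Python sketch. It assumes you already record the average hours a work item spends in each delivery stage. The stage names, helper functions, and numbers are hypothetical and chosen only to illustrate the pattern.

```python
def total_hours(stage_hours: dict[str, float]) -> float:
    """End-to-end time for one work item across all stages."""
    return sum(stage_hours.values())


def slowest_stage(stage_hours: dict[str, float]) -> str:
    """The stage where a work item spends the most time."""
    return max(stage_hours, key=stage_hours.get)


def stage_deltas(before: dict[str, float], after: dict[str, float]) -> dict[str, float]:
    """Change in hours per stage (negative means faster)."""
    return {stage: after[stage] - before[stage] for stage in before}


# Hypothetical average hours per work item, before and after adopting AI tools.
before = {"coding": 10.0, "review": 8.0, "testing": 14.0, "deploy": 2.0}
after = {"coding": 6.0, "review": 9.0, "testing": 16.0, "deploy": 2.0}

print(stage_deltas(before, after))
print(slowest_stage(after))
print(total_hours(before) - total_hours(after))
```

In this invented example, coding gets four hours faster, but review and testing absorb most of that gain, so end-to-end time improves by only one hour and testing remains the slowest stage. Measuring only coding speed would hide this.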

Let data reveal what you couldn't predict

Until teams learn to ask different questions, they’ll keep getting the wrong answers. And without measurement, it’s difficult to find new questions – you’re flying blind.

That’s the beauty of data. With measurement, teams can work like scientists and gather new data that either supports or contradicts old theories. The data can then provoke new questions and lead teams to discover new ways of understanding their work and its results.

From adoption to impact: the measurement imperative

Good news: The industry, on the whole, will not miss AI. Bad news: The industry is on track to miss AI’s full potential.

Incremental gains are better than no gains, but these small benefits risk motivating companies to focus on the short term — on what they can observe now — rather than building the AI metrics infrastructure needed to capture long-term transformation.

The biggest benefits lie beyond what we can see today. We can glimpse them if we take the time to learn how to measure and, more importantly, learn how to learn.

Amy Carillo Cotten

Amy Carillo Cotten is Director of Client Transformation at Uplevel. With 12+ years of technology industry experience as a change consultant and program manager, she works directly with engineering leaders and their teams to increase growth, reduce risk, and maximize innovation.