Uplevel Security and Implementation

Uplevel processes a limited set of data that you approve, drawn from the tools your engineers use every day, and computes actionable insights that teams can use to meet their performance, effectiveness, and team health goals.

Uplevel analyzes sanitized employee collaboration data from sources such as Slack, Calendar, and org charts to provide insights to engineering teams. Data is first collected on-prem in the client's environment, so that all data made available to Uplevel is fully transparent and auditable. Only data that is approved and sent to Uplevel is used, and no direct connection between Uplevel servers and client data sources is required. For more information, see our latest SOC 2 Type 2 Audit Report.

See how data is processed through the Uplevel platform:

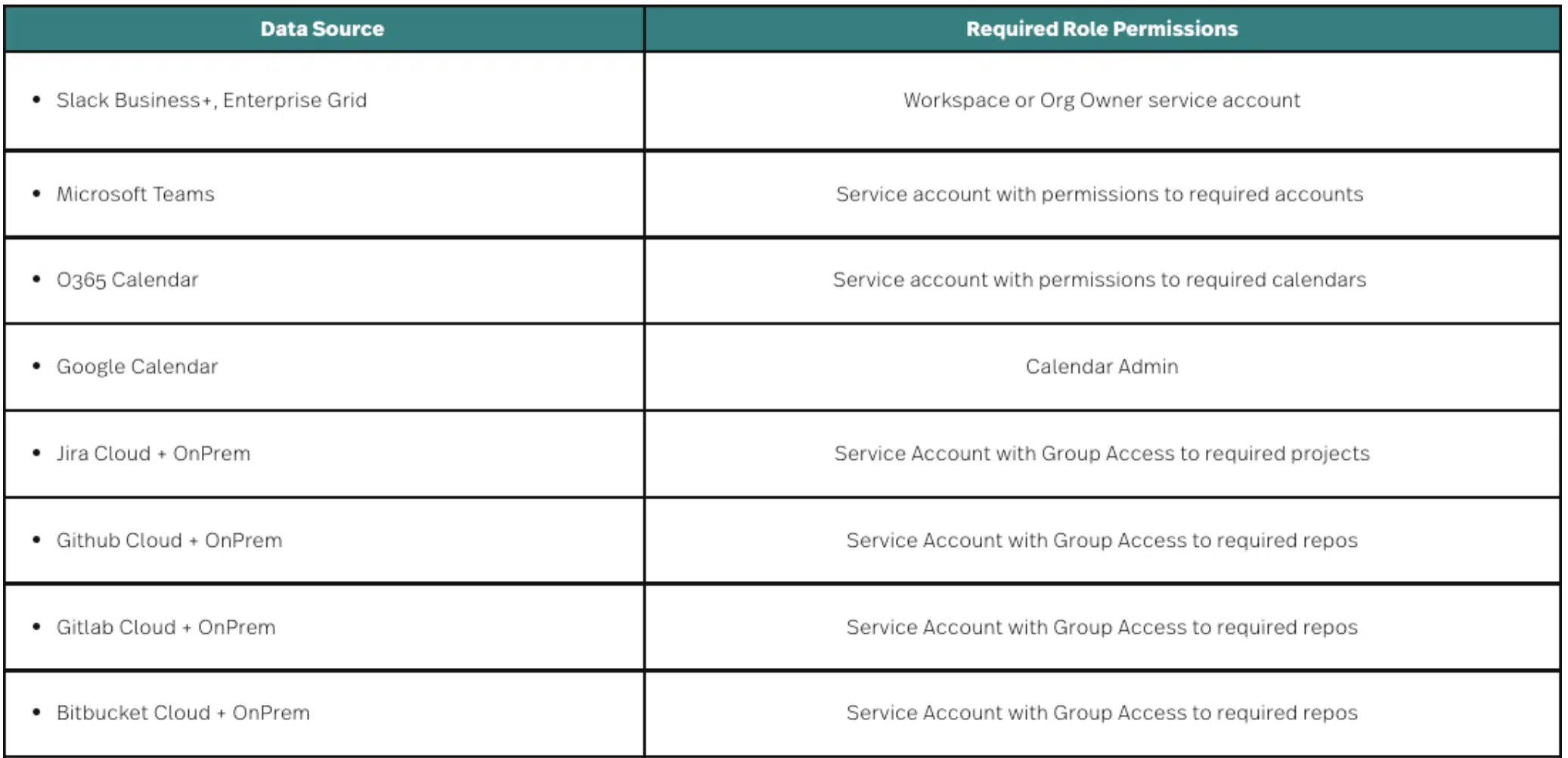

Uplevel Integrations & Permissions Overview

Uplevel recommends using a service account to set up each integration. All credentials are encrypted using HashiCorp Vault. Uplevel does not have access to these credentials.

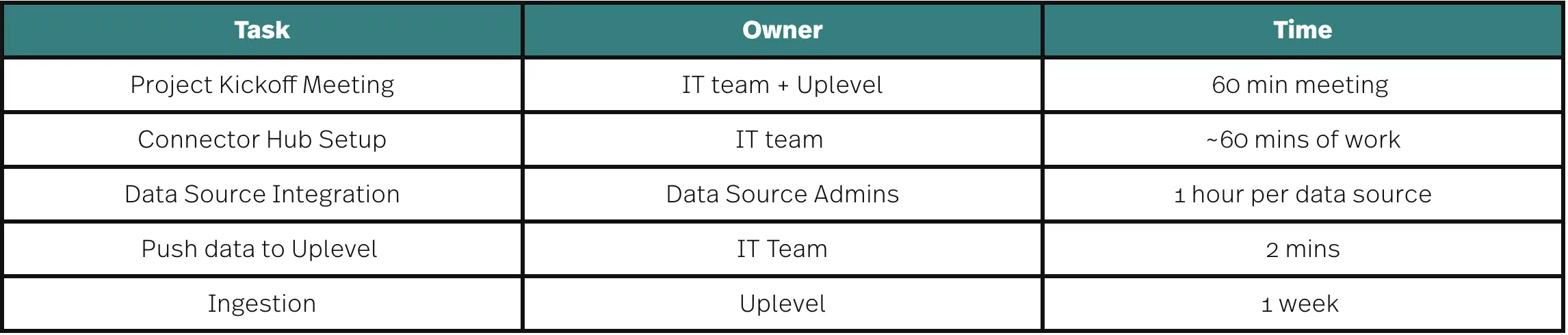

Timeline

The diagram below provides a timeline for each step of the implementation process. Once initial setup is complete, the Uplevel data science team processes the data to compute insights, which takes about one week. Setting up the integrations and getting the data flowing is an important first step, and we look forward to partnering closely with your team to complete it.

Step 1: Project Kickoff Meeting

During this call, we’ll review the installation process in detail and answer any specific questions about the architecture. We will also review any outstanding security concerns. Typically, we have the following people attend this meeting:

- IT leads owning this project

- Data source owners (Chat, Calendar, Jira, Version Control)

- Security team members responsible for reviewing the Uplevel platform

Prior to the meeting, please fill out this form so the Uplevel team can get a better understanding of your data sources.

Step 2: Connector Hub Setup

This part of the process is owned by the IT lead. It involves setting up a VM and installing the Connector Hub on that machine. There are two ways to do this:

- AWS CloudFormation Script

- Shell Script

Both options are outlined in the Implementation Handbook below. There is also the option to install a redaction script that looks for sensitive data such as PII and PHI. Please reach out to the Uplevel team for more information.

Step 3: Data Source Integration

Once the Connector Hub is installed, the next step is to connect each data source that will be used for the analysis. Uplevel recommends setting up a service account for each integration. Details about the permissions needed can be found in the Implementation Handbook.

Step 4: Push Data to Uplevel

Once the data sources have been connected, you have the option to review all of the redacted JSON outputs before sending data to the Uplevel team for processing. After approval, automatic data uploads can be enabled on the Connector Hub settings page. If any sensitive information made it through redaction, please let the Uplevel team know and we can work on adding regular expressions to identify and remove the specified strings.
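To make the review step more concrete, here is a minimal sketch of how a reviewer might scan a redacted JSON output for leftover sensitive strings and test an additional regular expression before requesting it. This is an illustration only, not the Connector Hub's redaction script: the pattern set, the function names `find_leaks` and `redact`, the sample record, and the `[REDACTED:...]` placeholder format are all assumptions.

```python
import json
import re

# Hypothetical patterns for illustration; use whatever your security team specifies.
EXTRA_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def find_leaks(value, path="$"):
    """Yield (json_path, pattern_name) for every string value that matches a pattern."""
    if isinstance(value, dict):
        for key, child in value.items():
            yield from find_leaks(child, f"{path}.{key}")
    elif isinstance(value, list):
        for index, child in enumerate(value):
            yield from find_leaks(child, f"{path}[{index}]")
    elif isinstance(value, str):
        for name, pattern in EXTRA_PATTERNS.items():
            if pattern.search(value):
                yield path, name


def redact(value):
    """Return a copy of value with every pattern match replaced by a placeholder."""
    if isinstance(value, dict):
        return {key: redact(child) for key, child in value.items()}
    if isinstance(value, list):
        return [redact(child) for child in value]
    if isinstance(value, str):
        for name, pattern in EXTRA_PATTERNS.items():
            value = pattern.sub(f"[REDACTED:{name}]", value)
    return value


# Made-up record standing in for one redacted JSON output file.
sample = json.loads(
    '{"title": "Fix login", "comments": ["ping jane@example.com", "ok"]}'
)
leaks = list(find_leaks(sample))   # where sensitive strings remain
cleaned = redact(sample)           # what the output looks like with the extra patterns
```

Running `find_leaks` over each output file before enabling automatic uploads gives a short list of JSON paths to report, and `redact` lets you confirm that a proposed pattern removes the string without touching unrelated fields.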

Step 5: Ingestion

Once data is flowing, the Uplevel data science team will work on processing it and computing insights. This process typically takes about a week. No action is needed during this time.

Documentation

- Redaction Process and Example

- SOC 2 Type 2 Audit Report

- Information Security Policy

- Pentest Report – Connector Hub

- Pentest Report – Uplevel SaaS Product

- Data Flow Diagram

- Business Continuity Plan

- Incident Management Policy

- Disaster Recovery Plan

- Security Incident and Breach Notification Policy

- Implementation Handbook

- Implementation FAQs

- Privacy by Design and Data Deletion Policy

- Security FAQs

Sample Data Outputs

- GitHub Pulls

- GitHub Commits

- GitHub Pull Comments

- Bitbucket Commits

- Bitbucket Pulls

- GitLab Changes

- GitLab Commits

- GitLab Merges

- Jira Change Log

- O365 Calendar

- Google Calendar

- Microsoft Teams

- Slack

Questions?

Contact our team at security@uplevelteam.com.